Hacking Health Care

Jenna Wiens, PhD, an assistant professor of computer science and engineering at U-M, might add extra years to your life, thanks to one of her algorithms.

Jenna Wiens isn’t a medical doctor. But someday, her work might save your life. It won’t be because she developed a new medicine or invented a revolutionary surgical procedure. Instead, you might owe your extra years to one of her algorithms.

Wiens, an assistant professor of computer science and engineering at U-M, is one of an army of data scientists and other engineers who are descending on healthcare to tackle what could be the most massive data science puzzle the world has ever seen: a movement to transform medicine by harnessing information about patients much more effectively. The effort, known broadly as precision health, is expected to help doctors customize treatments to individual patients’ genetic makeup, lifestyle and risk factors, and predict outcomes with significantly higher accuracy.

One major branch of precision health is the development of big-data tools to customize treatments. Experts envision a future in which doctors and hospitals can draw on a web of constantly churning analytical tools that mash up data from a huge variety of sources in real time – for instance, your electronic health record, genomic profile, vital signs and other up-to-the-moment information collected during a hospital stay or via a wearable monitor. It could give doctors and hospitals the ability to make meticulously informed decisions based on an analysis of your entire medical history, from birth to right now.

Researchers like Julia Adler-Milstein, PhD, an assistant professor at the U-M School of Information and School of Public Health, say that in some ways, today’s move to data-driven medicine is similar to the move to data-driven retailing that took hold over a decade ago. Online sellers like Amazon have compiled exhaustive data stockpiles, analyzing years of browsing and purchase history for millions of customers, then using that past data to predict what you’ll buy next. It’s obsessively detailed, computationally advanced, and sometimes a little creepy – and it has revolutionized how consumer goods are sold. If a computer can analyze your purchase data to predict what you’ll buy next, why can’t it analyze your medical data to predict whether you’ll get sick? It’s an enticing question for doctors and data scientists alike. But, as with most things in healthcare, it’s complicated.

Health data is going to be valuable in ways we don’t even understand yet. Jenna Wiens, Assistant Professor of Computer Science and Engineering, U-M

“Amazon’s decisions are tightly intertwined with data. But healthcare has only started to evolve the model of decisions based on physician expertise,” Adler-Milstein explains. “That has always been exciting to me, and learning how to integrate data and information technology pieces into the complexity of healthcare is especially fascinating.”

Perhaps it’s no surprise that bringing healthcare data into the 21st century is tougher – far tougher – than building an algorithm that suggests new socks to go with your new shoes. The stakes are higher. The regulations are tighter. The costs are greater. And there’s more data. So much more data.

Eric Michielssen, PhD, the Louise Ganiard Johnson Professor of Engineering at U-M, predicts that the amount of healthcare data generated annually worldwide will rise to 2,300 exabytes (2.3 trillion gigabytes) by 2020. And new data sources are coming online all the time, from wearable sensors to new kinds of imaging and video data. It’s predicted that healthcare data will eventually push past traditional scientific data hogs like astronomy and particle physics. Much of this is due to genomics data, which gobbles up so much space that there isn’t a cloud big enough to hold it – scientists are largely limited to on-site data storage. One researcher even joked that “genomical” might soon overtake “astronomical” as a term for incredibly large things. And much of this data is piling up across a fragmented hodgepodge of systems that were never meant to work together, at hospitals and other healthcare players that are often reluctant to share it.

Syncing up an ocean of fragmented, inconsistent data with the advanced analytics and databases that will drive precision health knowledge seems like an impossible problem. But maybe that’s what makes it so attractive to engineers. Today, more of them are working in healthcare than ever before, at U-M and elsewhere. Biomedical experts, data scientists, electrical and computer science engineers are dedicating their careers to it, and healthcare providers, tech companies and the government are investing massive amounts of resources. With the challenge ahead of them, they’ll need it.

Fighting Infection with Data

Wiens, for her part, is working on front line tools – the system of analytics and other digital machinery that will turn raw data into knowledge that doctors can use to make better decisions. Among her projects is a tool that predicts which hospital patients are at risk of developing a life-threatening intestinal infection called Clostridium difficile, or C. diff. The disease has evolved into an antibiotic-resistant superbug at hospitals, where it affects an estimated 500,000 patients per year in the United States alone.

The actions that can slow the spread of C. diff can be surprisingly simple, like moving high-risk patients to private rooms or limiting their movement around the hospital. The trouble is, doctors don’t have a good way of figuring out who’s at risk.

Wiens’ team is solving the problem with machine learning, a technology that’s already widely used in online marketing and retailing and is gaining ground in precision health. It enables computers to “learn” by combing through vast pools of data, using elaborate mathematical algorithms to compare pieces of information and look for obscure connections. They then use those connections from past data to make predictions about the future.

Data is the raw material that makes tools like this possible. And the team gained access to a lot of it at the project’s outset: the entire electronic health record for nearly 50,000 hospital admissions at a large urban hospital. The data also included demographic information and detailed records of each hospital stay: vital signs, medications, lab test results, even their location in the hospital and how prevalent C. diff was in the hospital during their stay.

Armed with this cache of data, they set out to build a tool that could estimate a patient’s risk of developing C. diff by going far beyond known risk factors and analyzing thousands of variables in a way that humans can’t. It would look for relationships between variables, calculate how those relationships change during the course of a patient’s stay, and turn it all into a numerical score that estimates an individual patient’s probability of becoming infected with C. diff during their hospital stay.

It was a tall order, particularly because of the complex way the risk factors change during the course of a hospital stay. So the team used what are called multi-task learning techniques. Multi-task learning breaks a single task into several individual problems, looks for common threads and connections between each problem, then combines them into a single model.

The team includes dozens of experts on infectious disease and machine learning; its founding members include John Guttag, PhD, a professor in the MIT Department of Electrical Engineering and Computer Science and Eric Horvitz, PhD, MD, Technical Fellow and Managing Director at Microsoft Research. They started by crunching the patient data into binary variables that a computer can understand, ending up with around 10,000 binary variables per patient, per day. They then broke the task into six individual machine learning problems (see equation).

Doing the Math

Wiens’ team used the optimization problem above to learn a predictive model that calculates a patient’s daily likelihood of contracting a C. diff infection during a hospital stay.

They used multi-task learning to calculate a set of risk parameters (θ) by analyzing the electronic health records from a large set of hospital stays. Patient data (x) included a variety of clinical information — some of which may change over time, such as patient location, vitals and procedures — and some of which remains the same, such as patient demographics and admission details. C. diff infection status was represented by y. The expression considers each day of the stay (t), taking into account that a person’s status at admission matters less as the patient spends more time in the hospital. To reflect this, the risk parameters vary over the course of a hospital stay.

Wiens’ group calculated the set of risk parameters (θ) for different time periods simultaneously by finding the set of values that minimize the objective function given above. Then, they used these parameters to build a model that produces a daily risk score, estimating a patient’s probability of acquiring C. diff during a particular hospital stay.

Finally, they set the computer to work trawling through the data to build (or “learn”) a model. When the dust cleared, their learning algorithm found connections between C. diff and everything from patients’ specific medication history to their location in the hospital. It was a model that no human could have come up with, and a far cry from the quick bedside analysis that doctors rely on today.

Testing showed that their model was more effective at predicting which patients would get C. diff than current methods, correctly classifying over 3,000 more patients per year in a single hospital. Perhaps most importantly, it predicted who was at risk nearly a week earlier, providing more time to identify high-risk patients and take potentially life-saving action.

Wiens says the computing power needed run the model at a hospital is minimal. It’s already being integrated into one major hospital’s operations and could be rolled out at others in as little as a year, crunching actual patient data in real time to identify high-risk patients and alert doctors.

Wiens’ model is just one of many data-driven analytical tools that doctors may one day use to make better decisions and tailor treatments and medications to individual patients. Similar tools could predict which patients will suffer complications from heart surgery, more precisely target medications based on genomic and lifestyle data, and even predict the progression of complex diseases like Alzheimer’s and cancer.

“Health data is going to be valuable in ways that we don’t even understand yet,” Wiens said. “It’s going to move us away from a one-size-fits-all healthcare system and toward a model where physicians make decisions based on data collected from you and millions of others like you.”

But getting there isn’t just a matter of doing the math. It’ll take a new level of collaboration between data scientists, hospitals and others in the healthcare community. And in the world’s most fragmented healthcare system, that could be even tougher than it sounds.

Good Data is Hard to Find

To build the kind of health system Wiens and others envision, we’ll first need a better health data system. And that’s an area where the go-go world of computer science collides messily with the more cautious culture of medicine. Hospitals are collecting more data than ever, but most of it is sitting idle on proprietary record systems that weren’t designed to talk to each other. And for healthcare providers, sharing comes with risks: they worry about giving away secrets to competitors, angering patients, running afoul of vague privacy regulations and a variety of other pitfalls.

But that data is the lifeblood of the work that researchers like Wiens are doing. There are some large storehouses of data, and in fact U-M has one of the largest stores of genomic data in the world. But there’s no central source of broad, widely accessible data. And that limits what researchers can do.

Sometimes scientists and doctors don’t know what to look for, and I think that’s this millennium’s challenge. Barzan Mozafari, Assistant Professor of Computer Science and Engineering, U-M

Wiens believes that the pace of discovery could increase dramatically if more data were publicly available. It would enable multiple researchers to use the same set of data, leading to more consistent research results and making it easier for researchers to verify each other’s findings. It would also mean that research topics would less often be limited by the types of data available.

“My work is about taking data and turning it into knowledge, and sharing data publicly would be such a game changer for the field,” she said. “There’s so much data out there, but we don’t have access to the vast majority of it.”

Fighting Disease with Data

More and more researchers are finding ways to use data to keep people healthy. Here are a few examples of the latest data-driven health projects at U-M.

MANAGING BIPOLAR DISORDER

A proposed smartphone app could listen to the voices of bipolar disorder patients, analyzing subtle changes in voice and speech to predict episodes before they happen. Emily Mower Provost, PhD, assistant professor of electrical engineering and computer science, Satinder Singh Baveja, PhD, professor of electrical engineering and computer science and Melvin McInnis, MD, Thomas B and Nancy Upjohn Woodworth Professor of Bipolar Disorder and Depression at University of Michigan Medical School

OUTSMARTING THE FLU

A DARPA-funded research project is using big data to determine why some people who are exposed to germs like the flu get sick, while others stay healthy. It could help doctors better understand the immune system and protect patients from disease. Al Hero, PhD, John H. Holland Distinguished University Professor of Electrical Engineering and Computer Science

PREVENTING HOSPITAL INFECTIONS

A predictive tool can spot patients that are at high risk for a potentially deadly intestinal infection called Colostridium Difficile (or C. diff), enabling doctors to take measures that can keep them safe. Jenna Wiens, assistant professor of Computer Science and Engineering

MAKING ABDOMINAL SURGERY SAFER

A predictive model can analyze abdominal core muscle size to predict which patients are at high risk of complications from abdominal surgery. The tool could help physicians pinpoint risks and take preventative measures to keep patients safe. Stewart Wang, MD, endowed professor of burn surgery and professor of surgery at University of Michigan Medical School

Much of this awkwardness can be chalked up to differences in culture, says Hitinder Gurm, a U-M associate professor of internal medicine who has spent years straddling the line between data and medicine. He collaborates with Michigan Engineering’s computer science professors on big-data tools for healthcare.

“There’s a philosophical difference between what engineers consider research and what physicians consider research,” he said. “Physicians want to look at an individual application, determine to a great degree how effective it is, see if we can improve it. To us, that’s research. But computer scientists aren’t excited about this. To them, research is developing a completely new way to do something. We need to figure out a way to bridge that gap.”

Building the Bridge

The Precision Medicine Initiative (PMI), first announced by the White House in early 2015, aims to provide researchers and the public with a broad and deep pool of anonymized, widely accessible health data. Spearheaded by the National Institutes of Health, PMI is envisioned as a collective of a million or more volunteers who would provide a staggering variety of medical data: genomic profiles, electronic health record data, lifestyle information like exercise and diet habits – even blood and other biological samples, which would be collected and stored in a central facility.

The project’s creators believe that the volunteer nature of the project could allay hospitals’ and patients’ privacy concerns by getting permission from each participant before they volunteer any information – they’d be able to volunteer as much or as little information as they choose. By enabling volunteers to donate their existing medical record data through their insurance provider or hospital, they plan to tap into the stockpile of data that’s sitting unused at hospitals and other healthcare players. They’d also recruit new volunteers directly, who could take a medical exam and donate the captured information to the project.

If it works as planned, the PMI cohort could provide the sort of deep, detailed and consistent data that researchers like Wiens pine for. Funded with an initial grant of $130 million for fiscal year 2016, the project is in its early stages – a group of health care providers, medical records software companies and other experts are working to find the best ways to move forward.

Dr. William Riley, PhD, the director of the office of behavioral and social science research at the NIH and a clinical psychologist by training, says the project is already leading to more collaboration between doctors and data scientists, but there’s still a long road ahead.

“These aren’t technical issues, they’re logistical and policy issues – smoothing the way for hospitals, getting electronic health record vendors to make their new versions compatible. That takes time, but we’re headed in the right direction.”

U-M’s School of Public Health, Institute for Social Research and the University of Michigan Health System are playing a role in solving some of those issues, working with Google and other partners to design some of the digital tools that will make data available to researchers and participants while keeping it safe. Goncalo Abecasis, DPhil, the Felix E. Moore Collegiate Professor of Biostatistics and director of the Biostatistics Department in the U-M School of Public Health, says that determining precisely who should have access to which data, and presenting that data in a way that makes sense, will be key to U-M’s role.

“The goal is to make sure scientists can ask questions about the role of particular genes,” he said. “Google’s expertise lies in making the database scalable and managing access, while our expertise lies in presenting data in a way that’s easy to use and intuitive to researchers.”

The project’s goals are lofty, and Riley doesn’t pretend it will be easy to get a massive and disparate group of doctors, hospitals, software providers and others to work together. But he thinks they recognize that the benefits are too big to ignore.

“All of a sudden, this healthcare data is in a form that engineers and computer scientists can use,” he said. “Clinical researchers are beginning to see the value in that, but there’s still a gap in the way different people think about answering these questions.”

Making Data Even Bigger

What could researchers do with the kind of dataset that Riley and the NIH are working to create? More. A lot more. In fact, computer scientists like U-M’s Barzan Mozafari, PhD, believe that helping doctors find better answers is just the beginning. They say that data could also help researchers ask better questions. Mozafari, an assistant professor of computer science and engineering, is working to build new database systems to churn through enormous stores of data and find unseen connections that could lead to new discoveries and whole new branches of medical research, in everything from cancer to pharmaceuticals.

“Sometimes scientists and doctors don’t know what to look for, and I think that’s this millennium’s challenge, the million-dollar question,” he explains. “We’re trying to build a new generation of intelligent systems that are proactive, that can feed you hypotheses and find correlations. You can’t replace scientists with machines, at least not soon, but the idea is that the machine should be able to suggest things to the scientist.”

The trouble with mining healthcare data, says Mozafari, is that today’s databases aren’t designed to do a very good job of it. They’re made for the business world, where data tends to be cleaner and researchers often know precisely what they’re looking for.

You can’t replace scientists with machines, at least not soon, but the idea is that the machine should be able to suggest things. Barzan Mozafari, Assistant Professor of Electrical Engineering and Computer Science, U-M

Healthcare researchers often can’t use existing data science approaches, largely because there’s just so much healthcare data. It takes conventional systems too long to chew through it, and research has found that even short delays can disrupt the research process, causing a noticeable lag in creativity and idea generation. And the amount of data is growing faster than Moore’s Law, so better processors aren’t likely to provide a solution.

Also, healthcare data is messy. It contains thousands of variables, and many of them are often missing, incomplete, unreadable or recorded differently from one healthcare provider to the next. This trips up most databases, preventing them from providing good answers.

To get past these problems, Mozafari is designing a new kind of database system called “Verdict.” It uses what’s called “approximate queries.” Instead of plowing through a massive dataset with a single query to find the most accurate answer, it conducts several, slightly less precise searches simultaneously. It then compares and carefully combines these queries into a single answer. The resulting answer is 99 percent as accurate as a traditional approach, but can be done up to 200 times faster. That could mean getting an answer in seconds instead of hours.

Comparing multiple queries also helps to compensate for missing or incomplete data, providing better answers with less irrelevant information. And the system learns from past queries, so it becomes more and more accurate as the research process wears on.

Mozafari says these speed improvements could transform the research process, powering automated systems that sift through hundreds or thousands of hypotheses looking for obscure connections and bits of information that no human could find.

“We’re trying to hide the database plumbing so that it doesn’t hinder the discovery process,” he said. “When researchers can simply point the system at a lake of data, it’s going to take us far beyond where we are today.”

Mozafari is optimistic about the future relationship between engineers and healthcare, though he cautions that countries with more centralized healthcare systems have a head start in building a cohesive health data infrastructure.

“There are more well-managed, more efficient and more successful health systems in Europe. But we have many of the best data science researchers here in the U.S. They come from all over the world to work here,” said Mozafari. “This is a huge opportunity. If we harness it.”

A Petabyte-sized Problem: Managing the Deluge of Genomic Data

Genomic data is a key piece of the precision health puzzle — it can help researchers understand why diseases strike certain people, provide insight into how a disease works and lead to more targeted medications. And researchers are getting better and better at using it.

“The amount of data we can collect and process is growing four-fold every year,” said Goncalo Abecasis, the Felix E. Moore Professor of Biostatistics. “And unlike in the past, we can now look at entire human genomes. Ten to fifteen years ago, we could only look at a few genes, so we had to ask very narrow questions and there was a lot of guesswork. Today we can see the entire genome and use that to look for correlations with disease.”

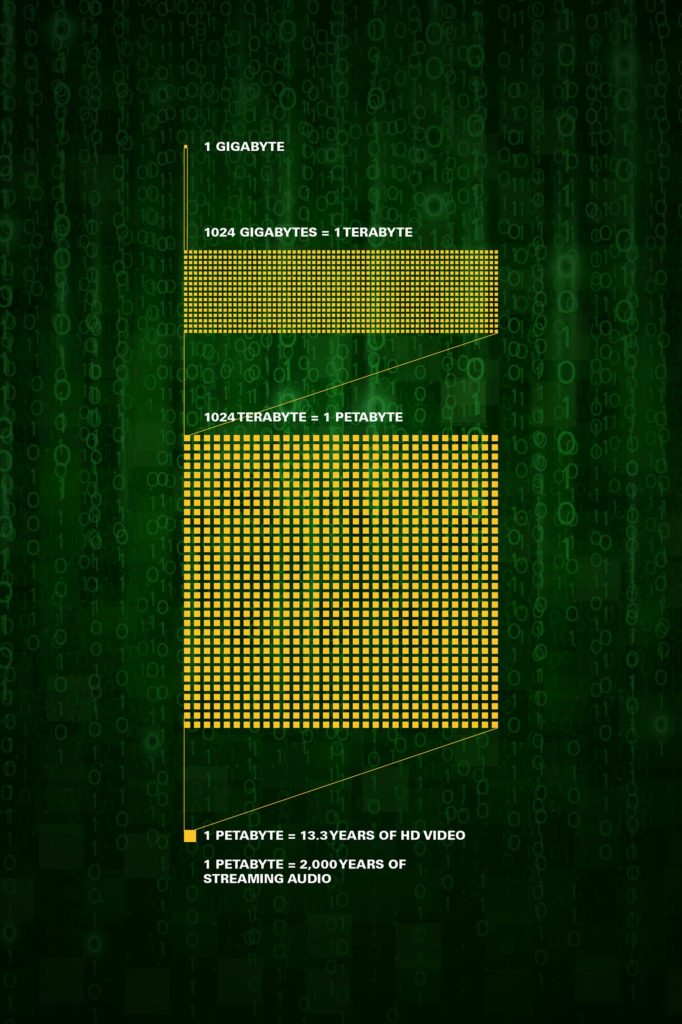

But genomic data is ginormous — a single human genome takes up about 100 gigabytes of memory. That adds up fast when researchers are working with large numbers of patients, creating a vexing challenge for data scientists.

This graph displays the digital storage capacity of the petabyte.

“Most campuses don’t know what to do with this data,” said Eric Michielssen, the Louise Ganiard Johnson Professor of Engineering at U-M. “If you have 10,000 patients, the data volume associated with their data is petabytes. That’s millions of gigabytes. The storage and transport costs are incredible.”

The costs are so great, in fact, that making copies of genomic data available to researchers often isn’t practical — storing that new copy could cost hundreds of thousands of dollars.

The Michigan Institute for Data Science (MIDAS) is working to find a better way, enabling researchers to use and share data without creating multiple copies. The project’s dual focuses are on finding less expensive ways to collaborate and on building new databases that can keep patient information safe even as it’s made available to more and more researchers. You can learn more about their efforts at midas.umich.edu.

On the clinical side, Abecasis is working to gather secure, shareable genomic data for large numbers of patients. He’s heading up two initiatives that are making more genomic data available to the researchers who need it.

The Michigan Genomics Initiative enables non-emergency surgery patients at the University of Michigan Health System to volunteer their genomic and electronic health record data, making it part of a data repository that can be used by researchers. The initiative has captured data from over 32,000 patients since it began; Abecasis says that 80 percent of eligible hospital patients choose to participate.

Genes for Good uses social media to collect health, lifestyle and genomic data from online volunteers. It also enables participants to compare their health information to other participants and use it to make more informed health decisions. Abecasis says the program has over 10,000 participants thus far. The program is free, and any United States resident who is 18 or older and has a Facebook account can participate. To sign up, visit www.genesforgood.com.